AI on the Edge: On-device inference with a Google Coral device

Introduction

Artificial Intelligence (AI) and Machine Learning (ML) are among the most disruptive technology trends the world is facing. Although running inference with AI and ML models is becoming increasingly affordable, training such models is still a bottleneck in terms of computational cost and the resources required. Techniques such as data parallelism, model parallelism, GPU-accelerated computing and distributed computing are being used to address this bottleneck. At the same time, advances in Internet of Things (IoT) devices have led to an explosion of data, which brings new challenges such as:

- Latency and network costs associated with transferring data between the points where it is collected and where it is used for training or inference

- Privacy concerns, as data is shared indiscriminately across many devices

- Scalability issues as the number of connected devices grows

To address these challenges, performing machine learning on edge devices has recently become a focal point for many players in the market. In practice, this means that inference is carried out on the edge device; full-scale model training on edge devices is still not the norm because of their computational limitations. There is an ongoing debate about which device should be considered an 'edge' device: a device that collects the data, such as a sensor, or a device that aggregates the data from sensors, such as a gateway. However, it is widely accepted that a device located very close to where the data is generated (thus potentially reducing data-transfer costs) can be considered an edge device.

In this article, I am going to discuss the Google Coral Edge TPU USB Accelerator, which Google released almost a year ago as part of its toolkit for performing AI at the edge.

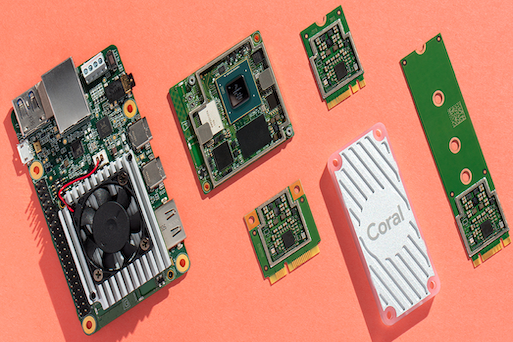

Google Coral Edge TPU USB Accelerator

According to the product datasheet, the device can perform 4 trillion operations per second (4 TOPS) while using 0.5 watts per TOPS (2 TOPS per watt). It is compatible with Debian Linux 6.0 or higher, macOS 10.15 and Windows 10, and supports Python 3.5, 3.6 and 3.7. It runs TensorFlow Lite models, with certain restrictions on how a model is built: the model must be quantized to 8-bit integers and compiled with the Edge TPU Compiler. More information can be found on the official Coral website.
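As a quick consistency check on the two datasheet figures, assuming the device actually sustains its 4 TOPS peak, the implied power draw works out as follows:

$$
P_{\text{peak}} = 4\ \text{TOPS} \times 0.5\ \frac{\text{W}}{\text{TOPS}} = 2\ \text{W},
\qquad
\frac{4\ \text{TOPS}}{2\ \text{W}} = 2\ \frac{\text{TOPS}}{\text{W}}
$$

So "0.5 watts per TOPS" and "2 TOPS per watt" describe the same efficiency, corresponding to roughly 2 W at full load.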

Running an Object Recognition Model on the USB Accelerator

I trained a simple object recognition TensorFlow Lite model to try it out on the USB Accelerator. The model classifies whether a mobile phone is an Apple or an Android phone when its screen is lit up. It can therefore recognise only the following three classes:

- An Apple mobile

- An Android mobile

- Background
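Because the Edge TPU requires models quantized to 8-bit integers, a classifier like this typically returns raw uint8 scores, which can be mapped back to real values with the output tensor's scale and zero point (real = scale × (q − zero_point)) before picking a label. The sketch below is illustrative only: the label order (Apple, Android, Background), the raw output values and the quantization parameters are assumptions, not taken from the author's model. In practice the parameters would come from the `quantization` field returned by the interpreter's `get_output_details()`.

```python
from typing import List, Sequence, Tuple

# Hypothetical label order; the real order depends on how the model was trained.
LABELS = ["Apple", "Android", "Background"]


def dequantize(raw: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Map 8-bit quantized outputs back to real values: scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in raw]


def top_label(raw: Sequence[int], scale: float, zero_point: int,
              labels: Sequence[str] = LABELS) -> Tuple[str, float]:
    """Return the highest-scoring label together with its dequantized score."""
    if len(raw) != len(labels):
        raise ValueError("output length does not match the number of labels")
    scores = dequantize(raw, scale, zero_point)
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best], scores[best]


if __name__ == "__main__":
    # Made-up raw output; scale=1/256 and zero_point=0 are assumed values.
    print(top_label([20, 200, 36], scale=1 / 256, zero_point=0))  # ('Android', 0.78125)
```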

The model was trained on 1,775 images on my personal Mac.

I did not pay much attention to the model training itself, as it is not the focus of this article.

The important point, however, is that I was able to run inference with the model on the USB Accelerator.
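One way to run such a model on the USB Accelerator from Python is the `tflite_runtime` package with the Edge TPU delegate, as described in Coral's TensorFlow Lite Python documentation. This is a minimal sketch, not the author's actual script: it assumes `tflite_runtime` and the Edge TPU runtime are installed and that the model has already been compiled with the Edge TPU Compiler. The per-OS library names match the three operating systems listed in the datasheet.

```python
import platform
from typing import Optional

# Edge TPU runtime library names per OS, as listed in Coral's TensorFlow Lite Python docs.
EDGETPU_SHARED_LIB = {
    "Linux": "libedgetpu.so.1",
    "Darwin": "libedgetpu.1.dylib",
    "Windows": "edgetpu.dll",
}


def edgetpu_lib_name(system: Optional[str] = None) -> str:
    """Return the Edge TPU runtime library name for the given (or current) OS."""
    system = system or platform.system()
    try:
        return EDGETPU_SHARED_LIB[system]
    except KeyError:
        raise RuntimeError("Edge TPU runtime not available for {!r}".format(system)) from None


def make_interpreter(model_path: str):
    """Load an Edge TPU-compiled .tflite model with the Edge TPU delegate attached."""
    # Imported lazily so the helper above works without tflite_runtime installed.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[load_delegate(edgetpu_lib_name())],
    )
    interpreter.allocate_tensors()
    return interpreter
```

For example, `make_interpreter("phone_classifier_edgetpu.tflite")` (a hypothetical file name) returns an interpreter on which `get_input_details()`, `set_tensor()`, `invoke()` and `get_tensor()` can be called as with any TensorFlow Lite interpreter.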

The following GIF and video show the USB Accelerator running the object recognition model in real time.

The USB Accelerator is connected to my computer, which feeds it input from the computer's built-in camera.

Highlights

Google has made it extremely simple to run an ML model on its Edge TPU USB Accelerator. The device can easily be integrated with existing supported systems to run ML models at the edge. Because no data has to be sent to a server to obtain predictions, the device can be deployed offline, where there is no internet connectivity, and inference is performed efficiently on the device itself. Although I have not benchmarked inference performance myself, Google publishes official benchmark results. While inference can be performed at the edge, the model still needs to be retrained regularly, often using large-scale computing resources; otherwise, it may become outdated over time. Despite this drawback, ML inference at the edge can benefit many areas, such as predictive maintenance, surveillance and monitoring, and autonomous vehicles.
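Since no benchmark was run here, the following is a minimal sketch of how per-inference latency could be measured. `infer` is a hypothetical zero-argument callable, for example a lambda wrapping `interpreter.invoke()` on an interpreter loaded with the Edge TPU delegate; the warm-up and run counts are arbitrary defaults. The warm-up phase is there because the first invocation is typically slower (Coral's documentation attributes this to loading the model into Edge TPU memory).

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `infer` and return latency statistics in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Warm-up calls are executed but not timed, so one-off setup costs are excluded.
    for _ in range(warmup):
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "max_ms": max(samples),
    }
```

Reporting the median alongside the mean makes occasional slow frames (for example from the host's USB or camera handling) easier to spot.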